Book recommendation by Mark Coeckelbergh

06 April 2020

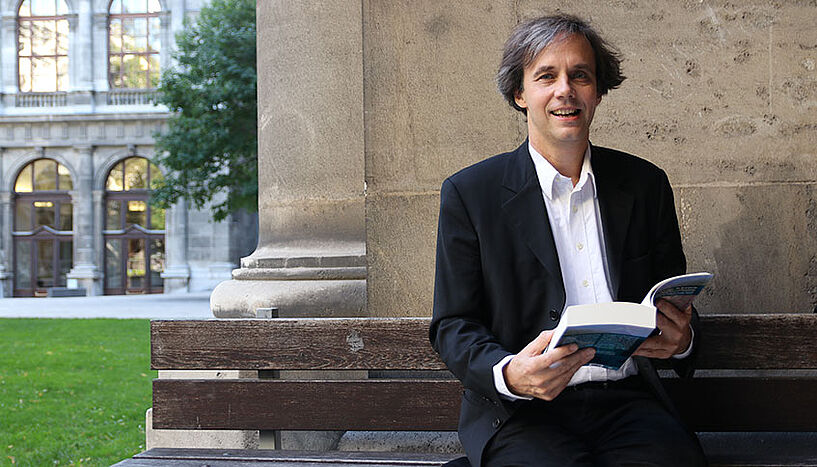

"We need to discuss the ethical issues now. Otherwise we might wake up in a world that nobody ever wanted", says philosopher Mark Coeckelbergh about AI. (© Martin Jordan)

"AI can be used for the exploitation and manipulation of people. We should be aware of this risk today", states Mark Coeckelbergh, philosopher of technology at the University of Vienna. In the interview he talks about his latest book on AI and also has a personal book recommendation for our readers.

uni:view: Your latest book, AI Ethics, was recently published. What is it about?

Mark Coeckelbergh: Over the past two years there has been a lot of interest in the future of artificial intelligence (AI). There are many fears and expectations, and many people are asking questions about the ethics of AI. Distancing itself from science-fiction ideas about a far future with human-like AI, this book gives an overview of the concrete ethical issues raised by AI now and in the near future. Based on my experience as a member of the European Commission's High-Level Expert Group and in other policy work, such as the Austrian Council on Robotics and AI (ACRAI), I also discuss what policymakers are doing on this theme and what challenges they face.

uni:view: Where do we find AI already in our lives – maybe often without even realising it?

Coeckelbergh: When we use apps and web-based services, for example for search or for buying a book online, we do not realize it, but there is AI and data science behind them. AI does not come only, or even mainly, in the form of humanoid robots or similar machines; it is about software and statistics that are used to analyze our data and sometimes to manipulate our behavior.

uni:view: Your book goes beyond the usual hype and nightmare scenarios to address concrete questions. What are these questions?

Coeckelbergh: They range from questions about privacy, data protection and safety, which we already know from other information technologies, to questions specific to AI about responsibility, transparency and bias. For example, if AI enables more automation, and machines such as self-driving cars act by themselves or recommender systems give legal advice, who is responsible for the behavior and decisions of these systems? It is also known that AI systems can be biased against particular groups; it is important to discuss such questions and explore what we can do to avoid or mitigate these problems. At the end of the book I also address AI and climate change. I think this is an important topic today, and one that is often not sufficiently addressed by policymakers who plan to propose regulation for AI. For example, the European Commission's current White Paper on AI mentions environmental issues and climate, but does not propose effective ways to assess and deal with them.

uni:view: What are the main challenges for policymakers?

Coeckelbergh: One of the main challenges for policymakers is to decide not only what needs to be done but also who has to do it. When we are talking about AI, we are also talking about governments that may use AI, and about powerful multinational corporations. We can make regulation at the national level, but AI is a technology that moves globally. In my view, we therefore also need global regulation. It is also difficult for policymakers to know when to regulate. Some applications are already running, but with new technologies we do not always know what will or could happen in the future. In my opinion, when it comes to ethical issues it is best to regulate as early as possible in order to avoid problems later. To do that, we can draw comparisons with the regulation of existing technologies. Another challenge is to involve experts while making sure that the policymaking process is and remains democratic and participative.

Find the books in the Vienna University Library:

1 x "AI Ethics" by Mark Coeckelbergh

1 x "Surveillance Capitalism" by Shoshana Zuboff

uni:view: Some thoughts on your personal book recommendation?

Coeckelbergh: Shoshana Zuboff’s book Surveillance Capitalism is relevant to discussions about one of the potential risks of AI: AI can be used for surveillance, for example via facial recognition. More generally, technologies such as AI can be used for the exploitation and manipulation of people. We should be aware of this risk today. I think it is important to see that it is not only governments that can carry out surveillance; surveillance is also done by the private sector. As individual users we are vulnerable to that risk, and we need regulation to protect citizens.

uni:view: You have read the last sentence and closed the book. What remains?

Coeckelbergh: A sense of concern and urgency. AI is already here, and it is deeply interwoven with our social and economic systems. We need to discuss the ethical issues now and deal with them. Otherwise we might wake up in a world that nobody ever wanted.

Mark Coeckelbergh is Professor of Philosophy at the Department of Philosophy of the University of Vienna. His research focuses on the philosophy of technology and media, in particular on understanding and evaluating new developments in robotics, artificial intelligence and (other) information and communication technologies.